Where can I find additional information?

For further details, refer to our manuscripts:

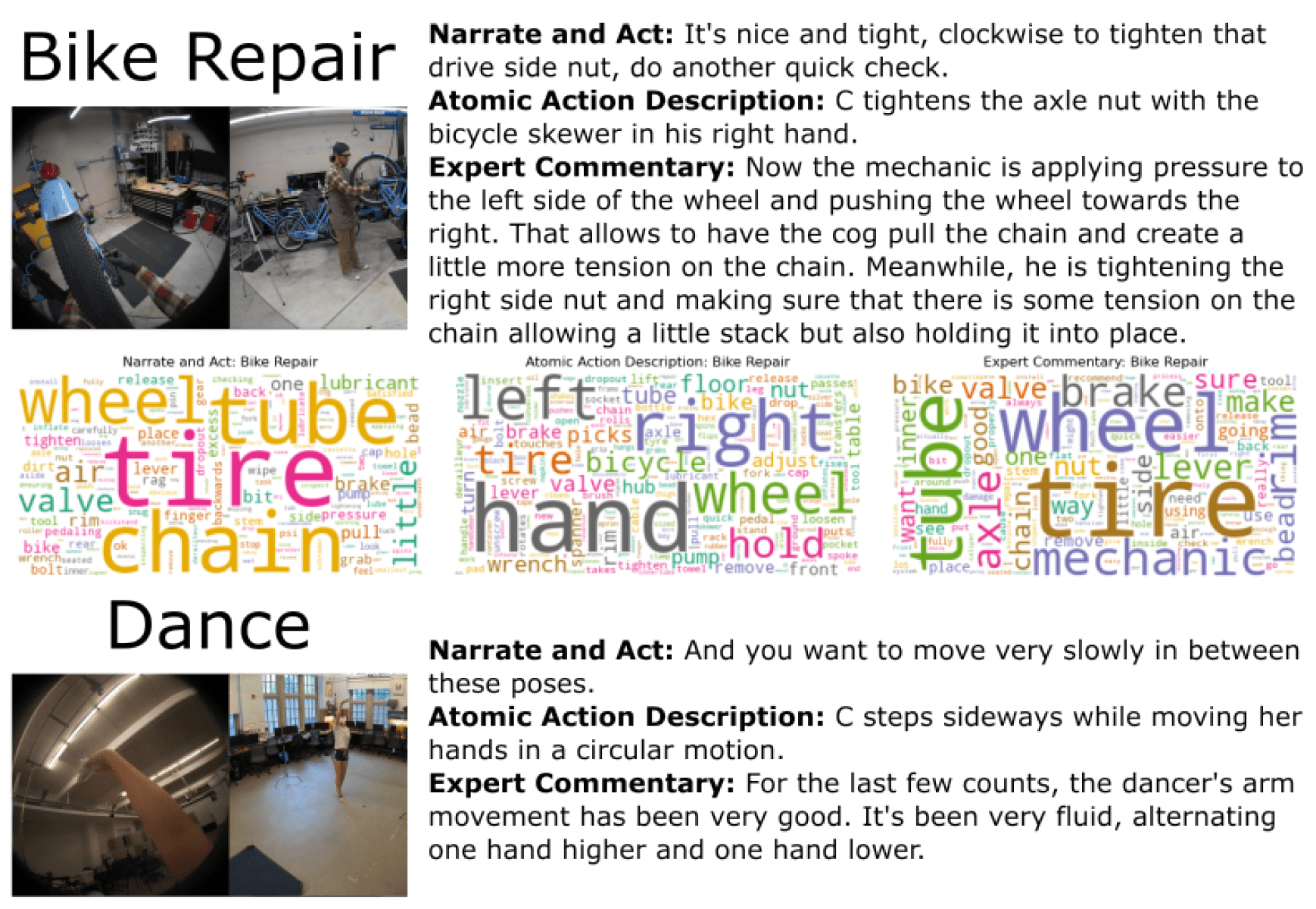

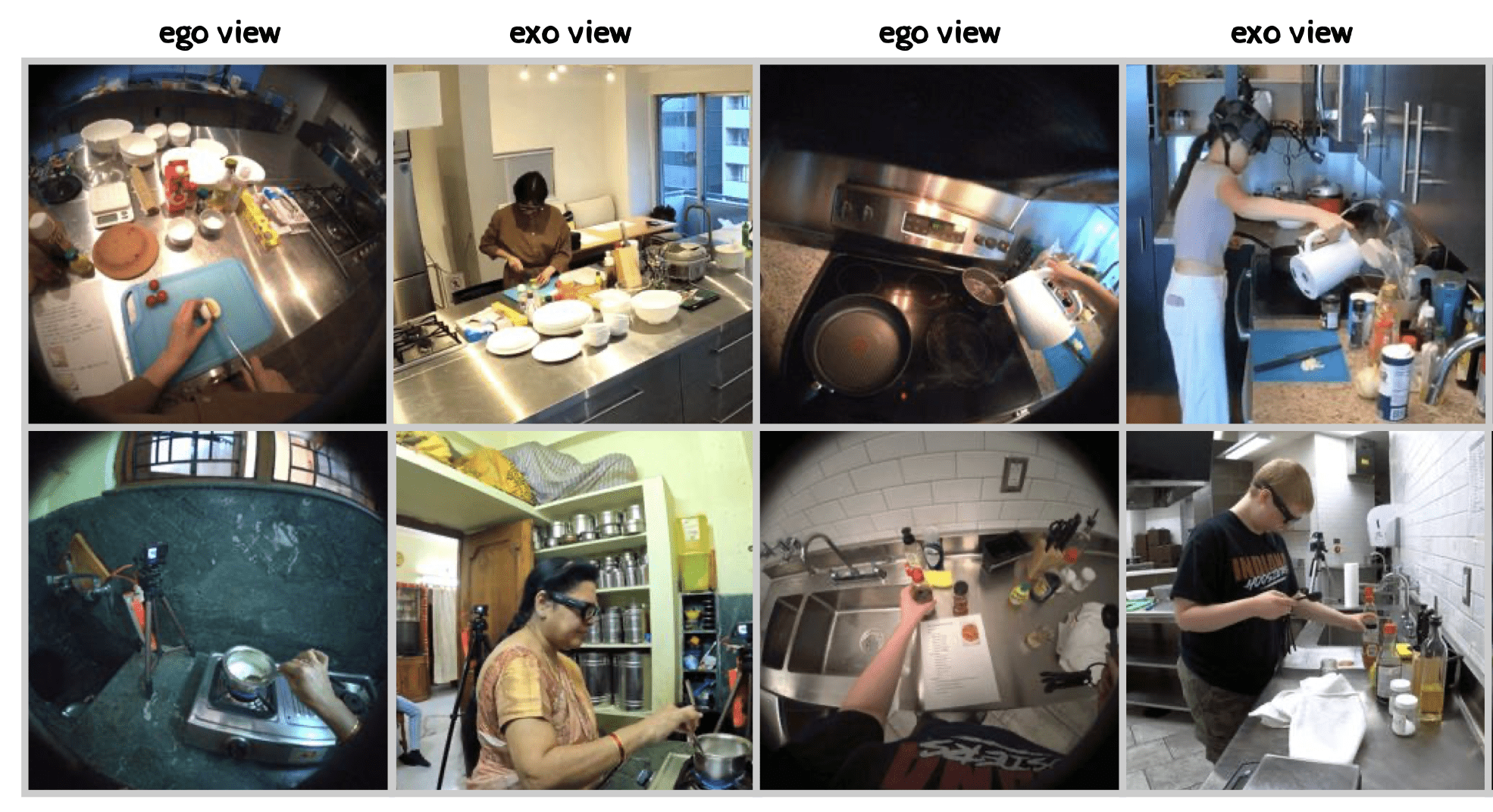

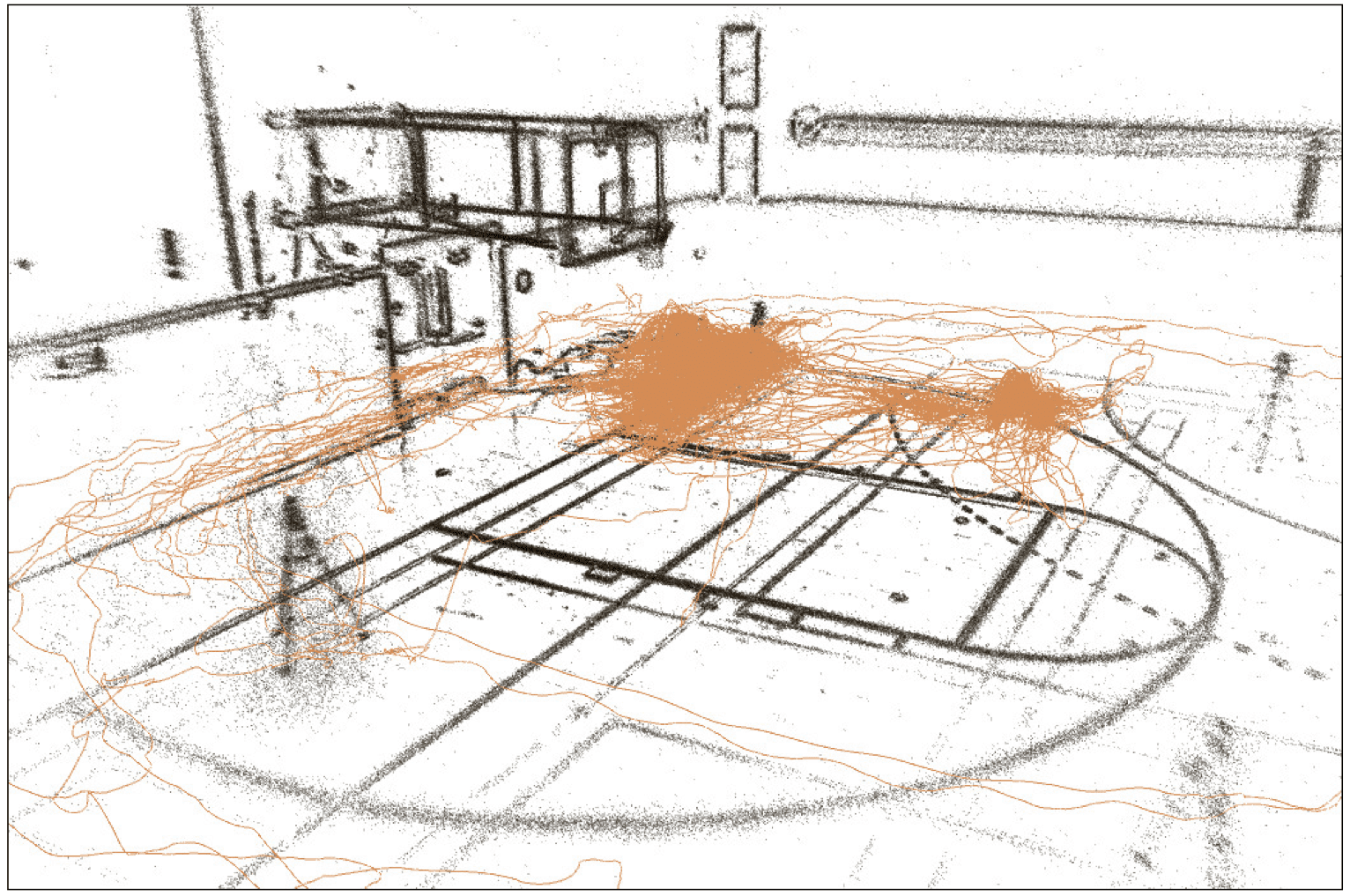

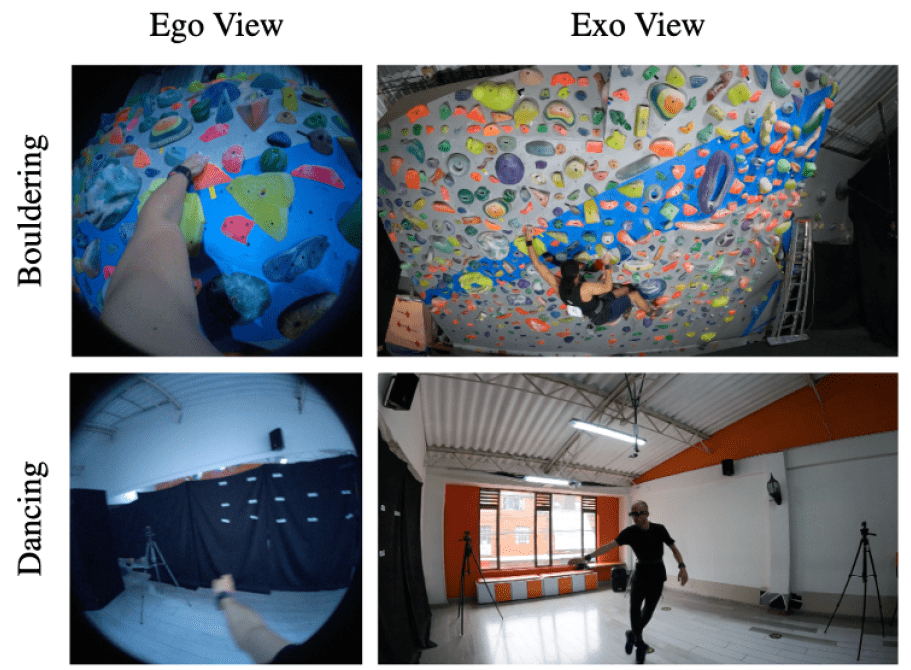

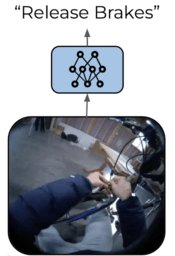

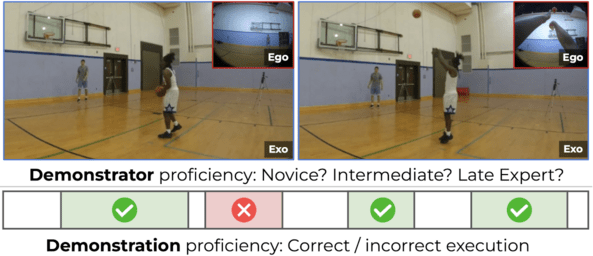

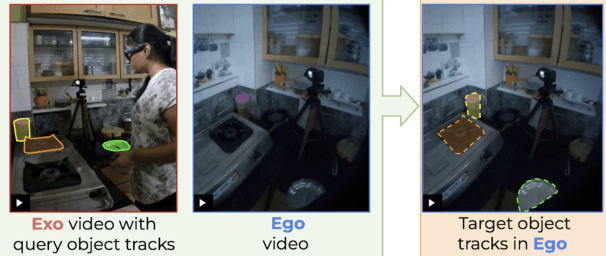

Journal Version. K. Grauman et al. (2025). Ego-Exo4D: Understanding Skilled Human Activity from First- and Third-Person Perspectives. International Journal of Computer Vision. Open Access | PDF

Conference Version. K. Grauman et al. (2024). Ego-Exo4D: Understanding Skilled Human Activity from First- and Third-Person Perspectives. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Supplementary Material | arXiv paper